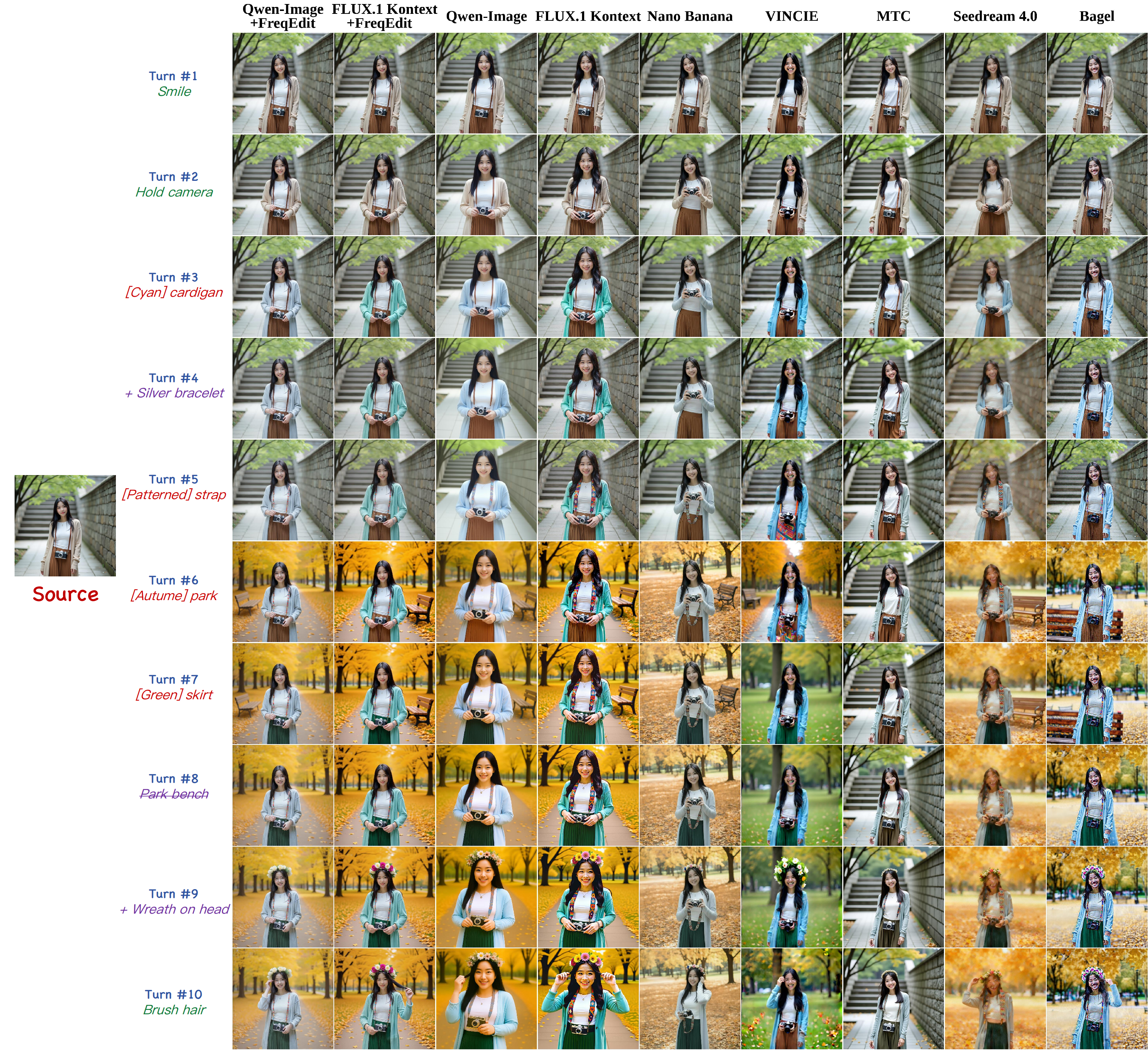

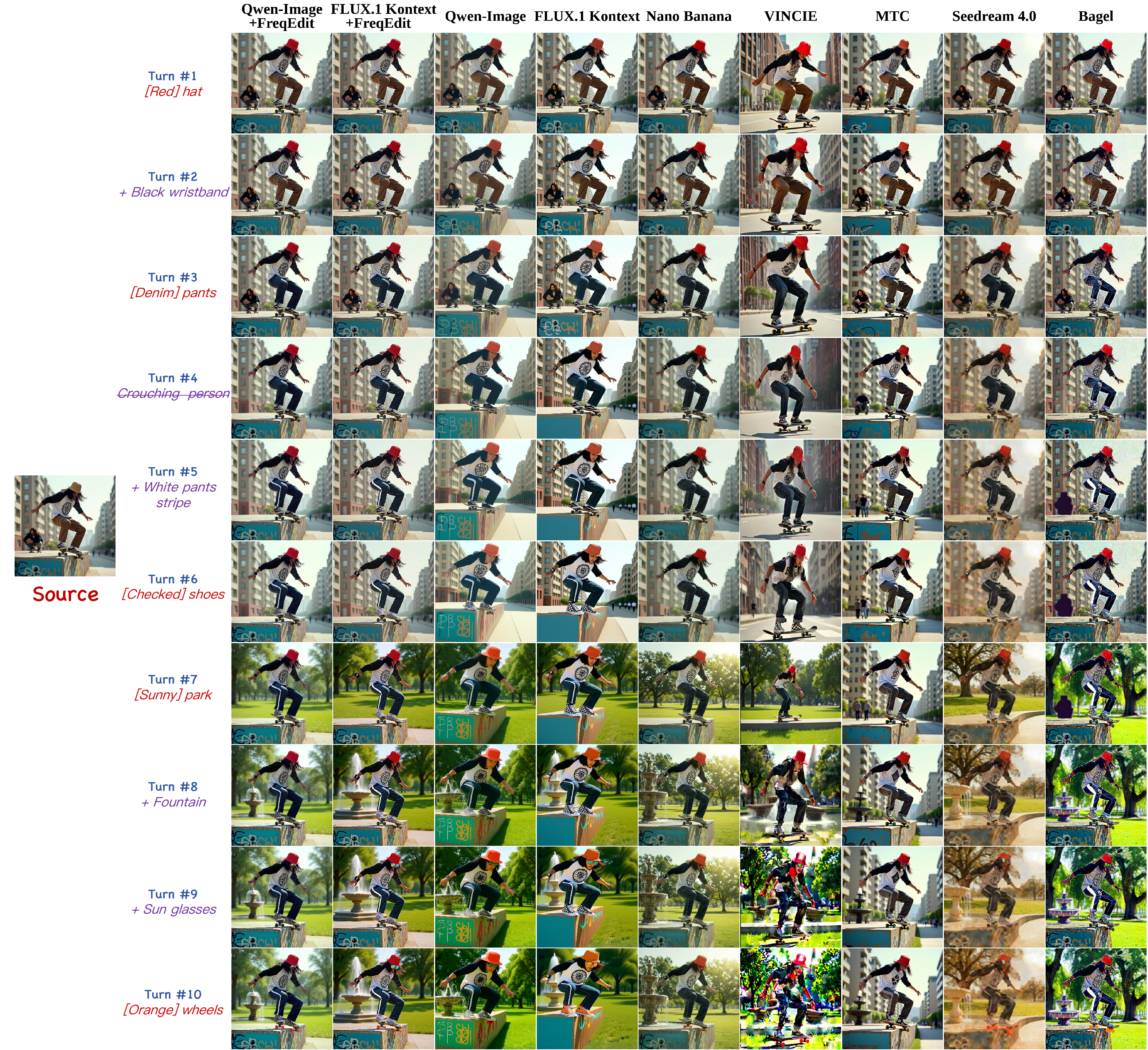

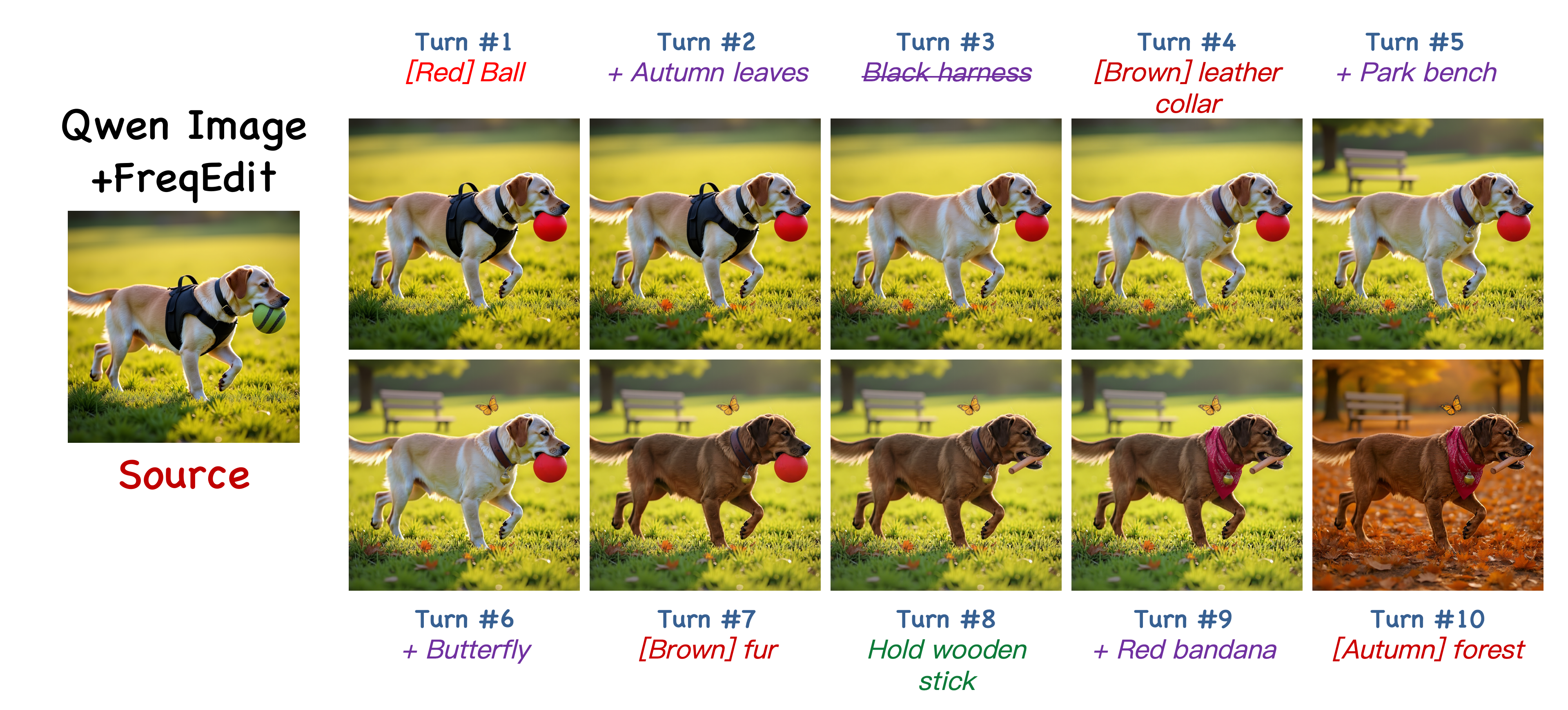

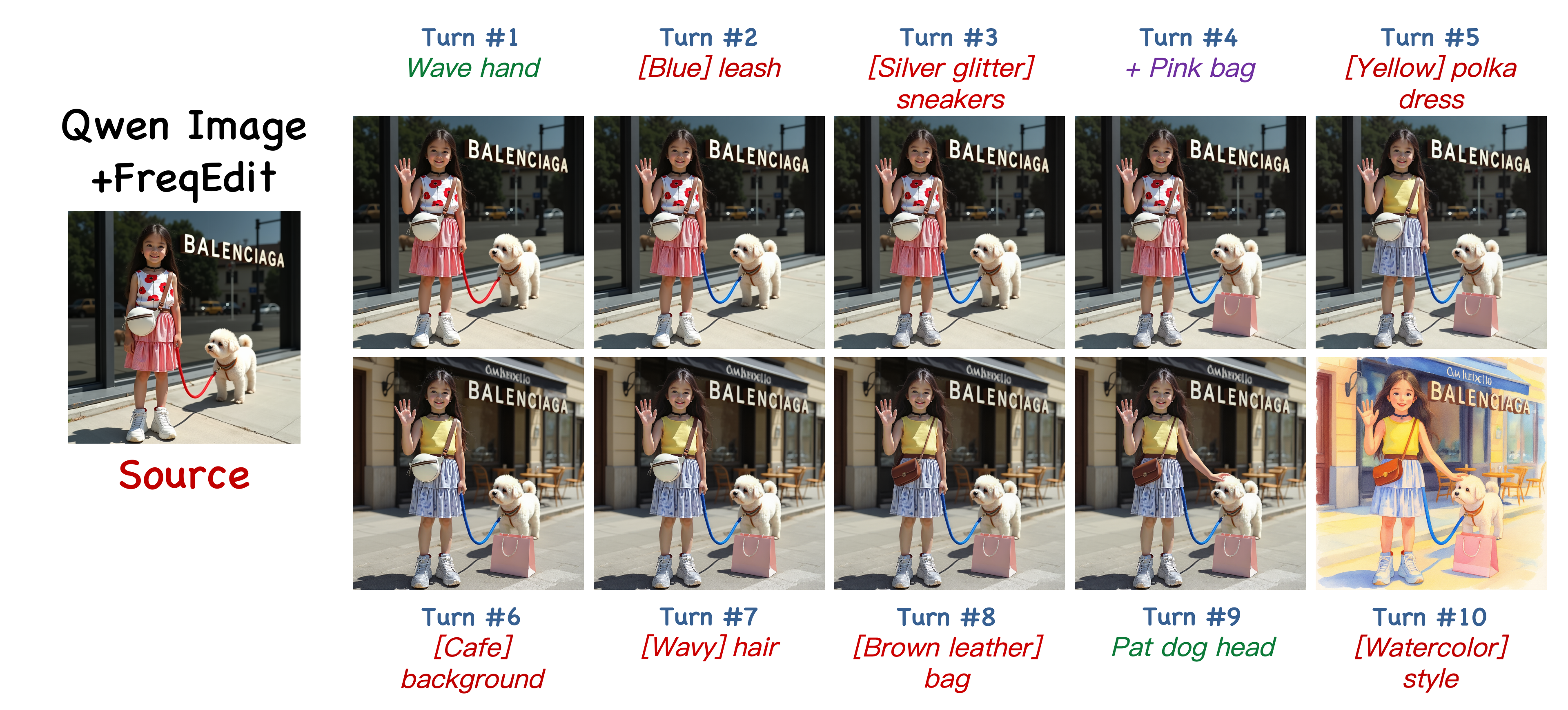

Instruction-based image editing through natural language has emerged as a powerful paradigm for intuitive visual manipulation. While recent models achieve impressive results on single edits, they suffer from severe quality degradation under multi-turn editing. Through systematic analysis, we identify progressive loss of high-frequency information as the primary cause of this quality degradation. We present FreqEdit, a training-free framework that enables stable editing across 10+ consecutive iterations. Our approach comprises three synergistic components: (1) high-frequency feature injection from reference velocity fields to preserve fine-grained details, (2) an adaptive injection strategy that spatially modulates injection strength for precise region-specific control, and (3) a path compensation mechanism that periodically recalibrates the editing trajectory to prevent over-constraint. Extensive experiments demonstrate that FreqEdit achieves superior performance in both identity preservation and instruction following compared to seven state-of-the-art baselines. Code will be made publicly available.

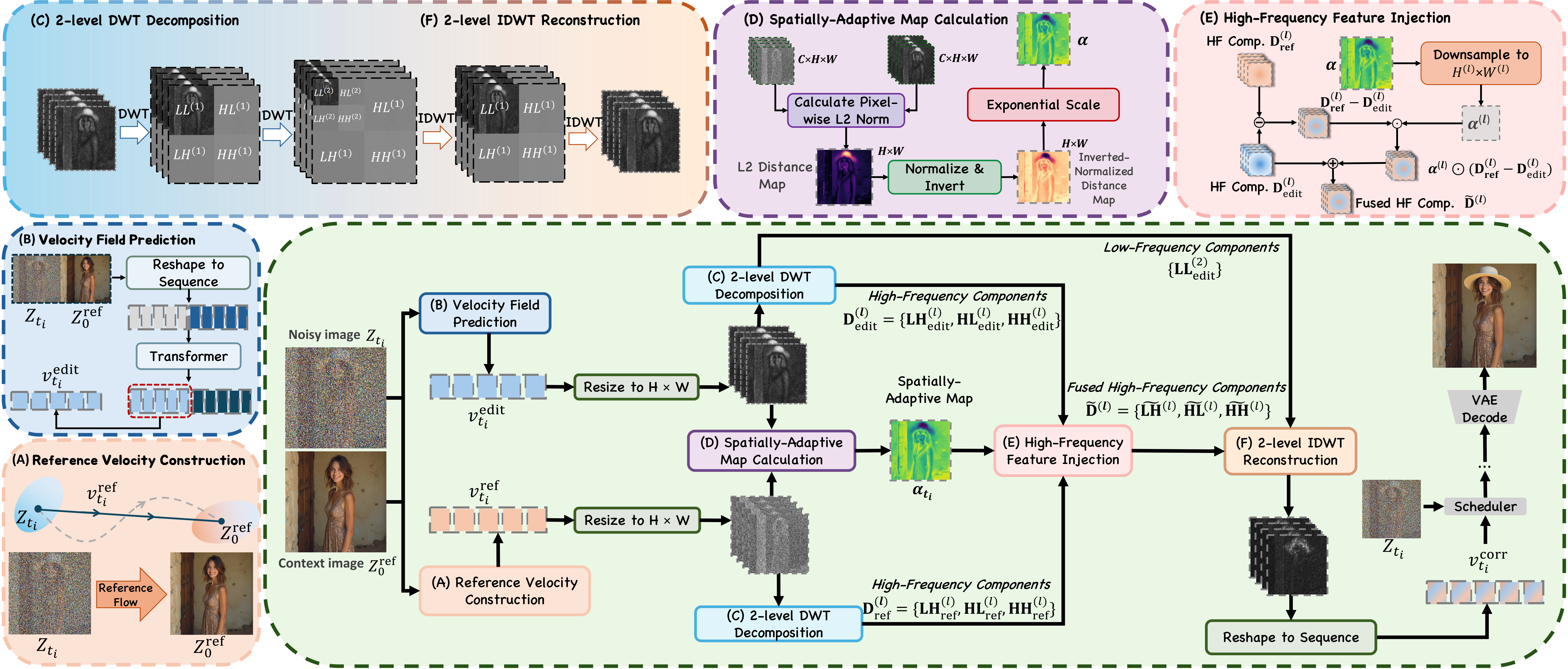

We introduce FreqEdit, a training-free framework that preserves high-fidelity visual consistency and instruction following across extended multi-turn editing sessions. Our core strategy is to reinforce high-frequency information during early denoising steps, when these components are most vulnerable to degradation. This vulnerability arises because early denoising steps primarily establish low-frequency global structure, leaving high-frequency details susceptible to suppression.

Specifically, we first construct a reference velocity field from the context image (i.e., the input image for the current editing turn) containing rich high-frequency details, and then inject these high-frequency components into the editing velocity field to counteract progressive degradation. However, naively applying uniform injection would either overly constrain edited regions or compromise the integrity of unedited areas. We therefore propose an adaptive injection strategy that spatially modulates the reference strength based on automatically predicted editing masks, ensuring that unedited regions maintain their original fidelity while edited regions retain sufficient flexibility for meaningful transformations. Furthermore, continuous high-frequency injection can overly restrict the generation process, potentially leading to incomplete or suboptimal edits. To address this, we introduce a path compensation mechanism that progressively recalibrates the editing trajectory, dynamically steering it back toward the intended editing direction.
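The first two components described above (high-frequency injection and mask-based adaptive strength) can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the ideal FFT high-pass filter, the `cutoff` radius, the two strength constants, and the `num_inject_steps` window are all assumptions made for illustration, and the function names are hypothetical. Path compensation is omitted because the text does not specify its update rule.

```python
import numpy as np


def high_pass(x: np.ndarray, cutoff: float = 0.25) -> np.ndarray:
    """Keep only spatial frequencies above `cutoff` (cycles per sample).

    Assumption: an ideal (hard) FFT mask over the last two axes; the
    source does not say which filter is used.
    """
    h, w = x.shape[-2:]
    fy = np.fft.fftfreq(h)[:, None]  # vertical frequencies
    fx = np.fft.fftfreq(w)[None, :]  # horizontal frequencies
    keep = np.sqrt(fy**2 + fx**2) > cutoff
    spectrum = np.fft.fft2(x, axes=(-2, -1))
    # The mask is symmetric, so the result is real up to rounding error.
    return np.fft.ifft2(spectrum * keep, axes=(-2, -1)).real


def inject_high_freq(
    v_edit: np.ndarray,      # editing velocity field, shape (C, H, W)
    v_ref: np.ndarray,       # reference velocity field, shape (C, H, W)
    edit_mask: np.ndarray,   # predicted edit mask in [0, 1], shape (H, W)
    step: int,               # current denoising step (0 = first)
    num_inject_steps: int = 10,      # hypothetical "early steps" window
    strength_unedited: float = 1.0,  # hypothetical strength outside the edit
    strength_edited: float = 0.2,    # hypothetical strength inside the edit
    cutoff: float = 0.25,
) -> np.ndarray:
    """Blend reference high frequencies into the editing velocity field."""
    if step >= num_inject_steps:
        return v_edit  # only early denoising steps are modified

    # Spatially varying strength: strong where the image should stay
    # unchanged, weak where the edit needs freedom.
    strength = strength_edited * edit_mask + strength_unedited * (1.0 - edit_mask)

    # Move the high-frequency band of v_edit toward that of v_ref;
    # the low-frequency band of v_edit is left untouched.
    hf_delta = high_pass(v_ref, cutoff) - high_pass(v_edit, cutoff)
    return v_edit + strength * hf_delta
```

With a strength of 1 everywhere, the output carries exactly the reference's high-frequency band on top of the edit's low-frequency band; with a strength of 0 it reduces to the unmodified editing velocity. The mask interpolates between these two extremes per pixel, which is the trade-off the adaptive strategy is meant to control.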

@article{liao2025freqedit,
  title={FreqEdit: Preserving High-Frequency Features for Robust Multi-Turn Image Editing},
  author={Yucheng Liao and Jiajun Liang and Kaiqian Cui and Baoquan Zhao and Haoran Xie and Wei Liu and Li Qing and Xudong Mao},
  journal={arXiv preprint arXiv:2512.01755},
  year={2025}
}